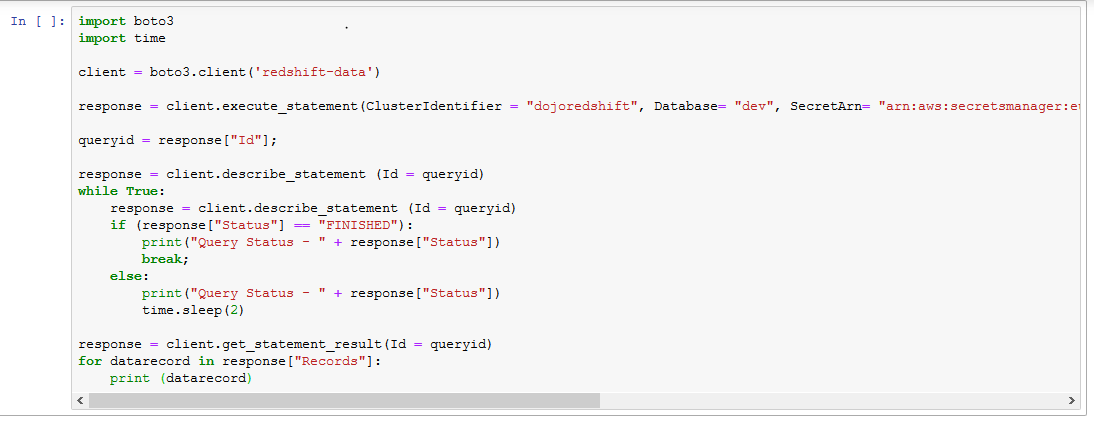

I am issuing a COPY command to connect to S3 and load data into Redshift. I'm attempting to do so using the Redshift Data API, which states that it supports parameterized queries. I'm using CSV files for most of the test runs because I hit a 4 MB limit while dealing with JSON formats. The format of the file you are trying to copy also plays an important role in fixing issues related to the COPY command.

Solution 2.1: If you don't care whether the object on Redshift is a view or a table, create the view as a table and then COPY it.

Solution 2.2: First COPY all the underlying tables, and then CREATE VIEW on Redshift.
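Solution 2.2 can be driven through the Data API. The following is a minimal sketch, assuming boto3's `redshift-data` client (passed in as `client`) and a provisioned cluster; the function name `copy_then_create_view` and every argument value are hypothetical. It sends the COPY statements and the CREATE VIEW in a single `batch_execute_statement` call, which runs the statements serially as one transaction, so the view is only created once every underlying table has loaded.

```python
from typing import Any, Protocol, Sequence


class DataApiClient(Protocol):
    """The subset of boto3's "redshift-data" client that this sketch uses."""

    def batch_execute_statement(self, **kwargs: Any) -> dict: ...


def copy_then_create_view(
    client: DataApiClient,
    cluster_id: str,
    database: str,
    db_user: str,
    copy_statements: Sequence[str],
    create_view_sql: str,
) -> str:
    """Hypothetical helper for Solution 2.2: COPY the base tables, then create the view.

    The statements run in array order as a single transaction, so a failed
    COPY rolls everything back and the view is never created on partial data.
    """
    sqls = [*copy_statements, create_view_sql]
    response = client.batch_execute_statement(
        ClusterIdentifier=cluster_id,
        Database=database,
        DbUser=db_user,
        Sqls=sqls,
    )
    # The Data API is asynchronous: the returned Id is what you later pass
    # to describe_statement(Id=...) to find out whether the batch finished.
    return response["Id"]
```

With a real client this would be called as `copy_then_create_view(boto3.client("redshift-data"), ...)`. Taking the client as a parameter keeps the helper testable without AWS credentials.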

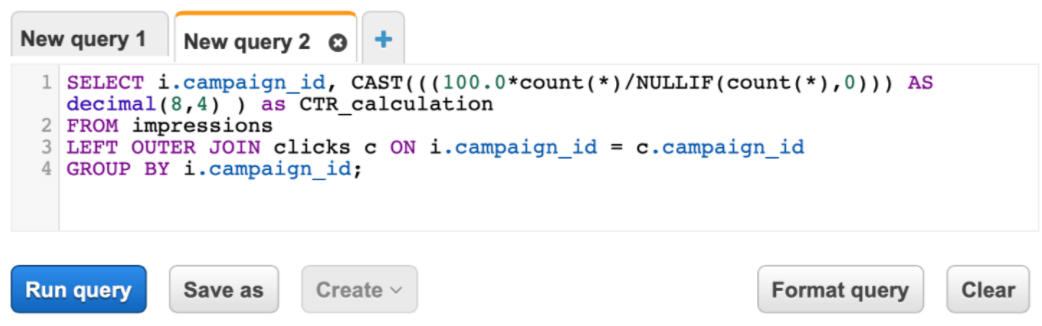

ETL: Redshift has a COPY command that is used to load data. I tried to use the COPY command to load data into a new table in Amazon Redshift. For JSON sources, COPY maps the data elements in the JSON source data to the columns in the target table by matching object keys, or names, in the source name/value pairs to the names of columns in the target table. Column names in Amazon Redshift tables are always lowercase, so when you use the 'auto' option, the matching JSON keys must also be lowercase.

Problem 1: Faced an issue with delimiters in a CSV file. The delimiter for a CSV file is normally a comma, but keeping the comma as the delimiter makes the load fail with the error below whenever the data itself also contains commas:

Error Code: 1213, Error Description: Missing data for not-null field

Solution 1.1: As the issue is with the comma as a delimiter, I specified the file format as CSV and ignored the headers. I even added the COMPUPDATE OFF parameter.

Solution 1.2: Using ESCAPE and removing quotes (REMOVEQUOTES) should work as well.

AWS documentation link that helped in solving this issue:

Problem 2: Views cannot be loaded with the COPY command, and Redshift did not support materialized views at the time (they have since been added). I tried copying views directly to Redshift from S3, which resulted in the error below:

ERROR: Invalid table. Context: ************ is not a table.

Also keep in mind that if this data is already in Redshift, the COPY command creates duplicate rows.

I was able to use boto3 to execute the COPY from S3 to Redshift. In Airflow, S3ToRedshiftTransfer can be used to do the same; that DAG imported `DAG` from `airflow`, plus `PythonOperator`, `BashOperator`, and `datetime` from the `datetime` package.

Pricing: you can start at $0.25 per hour and scale up to your needs. A more detailed look into pricing can be found here.
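The delimiter problem and its fixes can be sketched in Python. The first helper shows why a plain comma split breaks when a quoted value contains a comma, while a CSV-aware parser keeps the field intact. The second builds COPY statements for Solution 1.1, Solution 1.2, and the JSON 'auto' option. This is a sketch, not the exact command used in this post: the helper names, table name, S3 URI, and IAM role ARN are placeholders, and it assumes each CSV file has exactly one header row. Redshift does not allow ESCAPE or REMOVEQUOTES together with CSV, which is why the two solutions are separate modes.

```python
import csv


def split_naive_vs_csv(line: str) -> tuple[list[str], list[str]]:
    """Hypothetical helper: compare a plain comma split with a CSV-aware parse."""
    naive = line.split(",")              # breaks quoted values that contain commas
    parsed = next(csv.reader([line]))    # respects the double quotes
    return naive, parsed


def build_copy_sql(table: str, s3_uri: str, iam_role_arn: str, mode: str = "csv") -> str:
    """Hypothetical helper that builds a Redshift COPY statement.

    mode="csv"    -> Solution 1.1: CSV format, skip one header row.
    mode="escape" -> Solution 1.2: comma delimiter with ESCAPE and REMOVEQUOTES.
    mode="json"   -> JSON with 'auto' key-to-column matching (keys must be lowercase).
    """
    options = {
        "csv": "FORMAT AS CSV IGNOREHEADER 1",
        "escape": "DELIMITER ',' ESCAPE REMOVEQUOTES IGNOREHEADER 1",
        "json": "FORMAT AS JSON 'auto'",
    }
    if mode not in options:
        raise ValueError(f"unknown mode: {mode!r}")
    # COMPUPDATE OFF skips automatic compression analysis during the load.
    return (
        f"COPY {table} FROM '{s3_uri}' "
        f"IAM_ROLE '{iam_role_arn}' "
        f"{options[mode]} COMPUPDATE OFF;"
    )
```

For example, `split_naive_vs_csv('1,"Smith, John"')` gives three fragments from the naive split but the two intended fields from `csv.reader`. That is the same mismatch that surfaces in Redshift as a column-count or missing-data error.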
I'm writing this post to log all the errors related to the COPY command that I faced, which might help others save time. We use the S3 COPY command to move data from S3 into a Redshift table.

The COPY command that I have used for a while:

Make sure the schema for the Redshift table is created before running your COPY command.
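The "create the schema and table first, then COPY" order can be expressed as a list of statements to run in sequence (for example through the Data API). This is a minimal sketch under assumptions: the helper name `load_statements`, the schema, table, and column definitions are all hypothetical, and the optional `DELETE` is one way to avoid the duplicate rows that a repeated COPY would otherwise add. `DELETE` is used rather than `TRUNCATE` because `TRUNCATE` commits the surrounding transaction in Redshift.

```python
from typing import List, Mapping


def load_statements(
    schema: str,
    table: str,
    columns: Mapping[str, str],
    copy_sql: str,
    replace_existing: bool = False,
) -> List[str]:
    """Hypothetical helper: return the DDL that must exist before the COPY, then the COPY.

    Column names are written lowercase because Redshift folds unquoted
    identifiers to lowercase anyway (relevant for JSON 'auto' matching).
    """
    column_defs = ", ".join(f"{name.lower()} {sql_type}" for name, sql_type in columns.items())
    statements = [
        f"CREATE SCHEMA IF NOT EXISTS {schema};",
        f"CREATE TABLE IF NOT EXISTS {schema}.{table} ({column_defs});",
    ]
    if replace_existing:
        # COPY appends, so re-loading the same files would duplicate rows.
        statements.append(f"DELETE FROM {schema}.{table};")
    statements.append(copy_sql)
    return statements
```

Keeping the DDL in the same ordered list as the COPY means a fresh environment and a re-run go through the same path.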
